Read Parquet File Python Without Pandas

Any valid string path is acceptable, including the path to a local file.


Valid URL schemes include http, ftp, s3, gs, and file.


A typical pandas script starts with `import pandas as pd` and a small `write_parquet_file()` helper. To read a Parquet file from Azure Blob storage, Azure's storage SDK can be combined with pyarrow to load the file into a pandas DataFrame. Using PyArrow with Parquet files can give an impressive speed advantage when reading large data files. Data in a CSV or Parquet file is often never loaded into an external database system at all; instead it is loaded directly into a pandas DataFrame. The path string can also be a URL. Note that a bug introduced in pandas 1.0.4 caused `pd.read_parquet` to stop handling file-like objects. Apache Arrow is gaining significant traction in this domain as well, and DuckDB also "quacks" Arrow. The fastparquet package aims to provide a performant library to read and write Parquet files from Python without any need for a Python-Java bridge.

If you want a buffer holding the Parquet content, you can use an `io.BytesIO` object, as long as you don't use `partition_cols`, which creates multiple files: create `f = io.BytesIO()`, call `df.to_parquet(f)`, then `f.seek(0)` and `content = f.read()`. This is suitable for executing inside a Jupyter notebook running on a Python 3 kernel. Aside from pandas, Apache Arrow's pyarrow also provides a way to turn Parquet into a DataFrame.

With fastparquet, open the file with `pf = ParquetFile("myfile.parq")` (after `from fastparquet import ParquetFile`), then call `df = pf.to_pandas()` to load everything, or `df2 = pf.to_pandas(["col1", "col2"], categories=["col1"])` to load a subset. You may specify which columns to load and which of those to keep as categoricals, if the data uses dictionary encoding. A reader that builds plain Python objects, which you then have to move into a pandas DataFrame, will be slower than, for example, `pd.read_csv`.

Because machine learning means trying many options and algorithms with different parameters, from data cleaning to model validation, Python programmers will often load a full dataset into a pandas DataFrame without actually modifying the stored data. There is a Python Parquet reader that works relatively well. This walkthrough covers how to read Parquet data in Python without needing to spin up a cloud computing cluster. Since Parquet was developed as part of the Hadoop ecosystem, its reference implementation is written in Java.
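Although the reference implementation is Java, the file layout itself is language-neutral: a Parquet file starts and ends with the 4-byte magic `PAR1`, and just before the trailing magic sits a 4-byte little-endian length of the footer (the Thrift-encoded file metadata). This standard-library-only sketch checks that framing and slices out the raw footer bytes. Decoding the Thrift metadata and the column data is what libraries such as pyarrow or fastparquet do, and is not attempted here; the function names are hypothetical.

```python
import struct

MAGIC = b"PAR1"  # 4-byte marker at both ends of every Parquet file


def parquet_footer_length(data: bytes) -> int:
    """Hypothetical helper: validate Parquet framing and return the footer length.

    Layout: PAR1 | column chunks ... | footer | footer length (uint32, LE) | PAR1
    """
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet file: missing PAR1 magic bytes")
    (footer_len,) = struct.unpack("<I", data[-8:-4])
    if footer_len > len(data) - 12:
        raise ValueError("footer length is larger than the file allows")
    return footer_len


def parquet_footer_bytes(data: bytes) -> bytes:
    """Hypothetical helper: return the raw (still Thrift-encoded) footer bytes."""
    footer_len = parquet_footer_length(data)
    return data[len(data) - 8 - footer_len : len(data) - 8]


if __name__ == "__main__":
    # A synthetic blob with the right framing; a real file's footer is Thrift data.
    footer = b"fake-metadata"
    blob = MAGIC + b"column-data" + footer + struct.pack("<I", len(footer)) + MAGIC
    print(parquet_footer_length(blob), parquet_footer_bytes(blob))
```

To check a real file, pass `open(path, "rb").read()`; for large files you would instead seek to the end and read only the last 8 bytes first.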

On Arrow's Parquet reading speed: over the last year I have been working with the Apache Parquet community to build out parquet-cpp, a first-class C++ Parquet file reader/writer implementation suitable for use in Python and other data applications.

The code is simple. We can now write two small chunks of code to read these files, using pandas' `read_csv` and PyArrow's `read_table` functions. For the pandas 1.0.4 file-like-object bug, one change is to skip 1.0.4 and use the newer version when available.

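With pyarrow alone, `import pyarrow.parquet as pq` and then `df = pq.read_table(source=your_file_path).to_pandas()` reads a file; drop the final `.to_pandas()` to keep the data as an Arrow table. This blog is a follow-up to my 2017 Roadmap. The pandas `write_parquet_file()` helper reads the CSV with `df = pd.read_csv("data/us_presidents.csv")`, writes it with `df.to_parquet("tmp/us_presidents.parquet")`, and is then called as `write_parquet_file()`. Uwe Korn and I have built the Python interface and integration with pandas within the Python codebase (pyarrow) in Apache Arrow.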

Currently I'm using the code below on Python 3.5 (Windows) to read in a Parquet file. Bug: when importing PyTorch and then reading a Parquet file with pandas, the script randomly fails with a segmentation fault. This problem only occurs when importing PyTorch and seems to be related to #11326, as #12739 seems to resolve it.

I'm using both Python 2.7 and 3.6 on Windows. Many of the most recent errors appear to be resolved by forcing fsspec 0.5.1, which was released four days ago. For file URLs, a host is expected, so a local file looks like `file://localhost/path/to/table.parquet`. To follow along, all you need is a base installation of Python.

As with AWS, `pandas.read_parquet` won't work with a Parquet folder of one or more files, partitioned or not, on GCS. Loading data into a pandas DataFrame: a performance study. `read_parquet(path, engine='auto', columns=None, storage_options=None, use_nullable_dtypes=False, **kwargs)` loads a Parquet object from the file path, returning a DataFrame.

You can easily read this file into a pandas DataFrame and write it out as a Parquet file, as described in this Stack Overflow answer. The `path` parameter accepts a str, a path object, or a file-like object. The whole workflow can be done on a single desktop computer or laptop with Python installed, without Spark or Hadoop. We also measure the time it takes to read each file and compare the two as a ratio.

I've also experienced many issues with pandas reading S3-based Parquet files ever since s3fs refactored its file system components into fsspec. A performant Parquet library that needs no Python-Java bridge makes the Parquet format an ideal storage mechanism for Python-based big data workflows.

With pandas, the read is `import pandas as pd`, `parquetfilename = "File1.parquet"`, and `parquetFile = pd.read_parquet(parquetfilename, columns=["column1", "column2"])`. However, I'd like to do this without using pandas. The Python version I used is 3.6. Otherwise, using conda without other constraints, s3fs was resolving to fsspec 0.4.0 for me.

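They're fixing it in pandas 1.0.5. For a Parquet folder on GCS, pyarrow can read through gcsfs: after `import gcsfs` and `import pyarrow.parquet as pq`, create `gs = gcsfs.GCSFileSystem()` (add credentials or rely on the gcloud CLI setup), open the folder with `ds = pq.ParquetDataset("gs://analytics", filesystem=gs)`, and load it with `df = ds.read_pandas().to_pandas()`.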

Cannot Read Parquet File From Pandas Issue 1645 Databricks Koalas Github

Using Pyarrow To Read Parquet Files Written By Spark Increases Memory Significantly Stack Overflow

Spark Read And Write Apache Parquet Sparkbyexamples

Essential Cheat Sheets For Machine Learning And Deep Learning Engineers Data Science Data Science Learning Machine Learning

Pandas Missing Read Parquet Function In Azure Databricks Notebook Stack Overflow

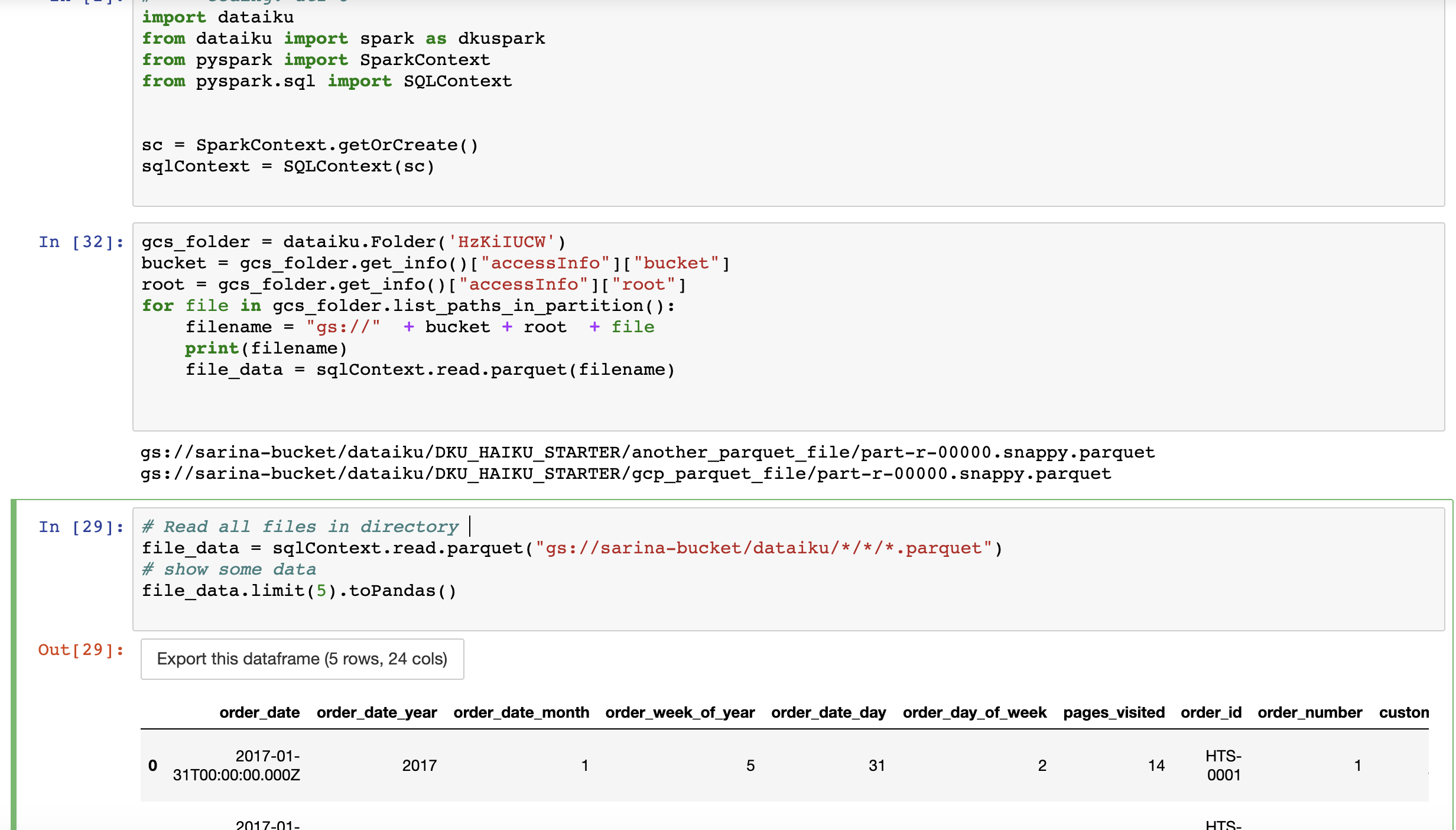

Solved How To Read Parquet File From Gcs Using Pyspark Dataiku Community

Pandas Missing Read Parquet Function In Azure Databricks Notebook Stack Overflow

Error Reading Parquet Files Created Using Pandas To Parquet Issue 38 Juliaio Parquet Jl Github

Post a Comment for "Read Parquet File Python Without Pandas"